Expressive Objects

Nov 2018 - Dec 2018

Human-Computer Interaction | UX Design

Objective

To explore how machines can express themselves through their inherent properties (vibration, sound, shape, colour, etc.) and the parts they already have, as an alternative to fitting every machine or robot with a screen, or with a speaker that talks in a human voice. The final objective was to develop a vocabulary for such machine behaviour.

Approach

The project space was multidisciplinary: 16 people from different design majors came together to work on this brief. It was highly collaborative and iterative, relying on prototyping and exploration to formulate hypotheses for the vocabulary we were trying to build. The class split into teams of 2-3 people, each working on one or two inherent properties, and we came together often to share our knowledge and build on each other's work. Over the course of the project, I worked (at different stages) with Shreya Mishra, Sucharita Premchandar and Tanay Sharma. The project was led by designer Shobhan Shah.

Skills & Tools Used

Design Research, Rapid Prototyping, User Testing, Research through Testing // Cinema 4D, Premiere Pro, Audition

Research

A few readings and many classroom discussions later, the 16 of us came up with a small map/table to try to understand what humanizes an inanimate object, and how (depicted below).

Explore & Observe

Next, we looked around us, observed different inanimate objects, and explored how they could be perceived as expressive by virtue of inherent properties like colour, sound and movement.

The rise in volume and intensity of a hairdryer makes it "seem" angry.

The rising pitch of water being poured into a metal bottle can suggest mounting anticipation.

Further Exploration

"How would machines express themselves to each other without talking/human expressions?"

"How would machines express themselves to humans without talking/human expressions?"

These questions led us to experiment with stop motion, short films, cameras and quick prototypes, framing and producing short audio-visual narratives and trying to capture each one as effectively as possible.

A balloon that deflates in sadness, while making a forlorn sound after it "feels abandoned."

We then came together again to share our explorations. We noted common patterns across each other's work and hypothesized whether varying those parameters produced a convincing depiction of the emotion we had in mind.

Theory Formulation

From these discussions, we drew up a framework of expressions, which we then tried to validate by animating simple things. We created a table of expressions for eight primary emotions and tried to define the parameters of each expression in terms of speed, direction, motion/movement and time (duration).

Graph of emotional expressions.

Table of emotions and corresponding parameters of expression.

To test this framework, each team chose a "mode" (colour, sound, vibration, movement, etc.) and used it to express various things. Hypothetical real-life examples: a phone could use vibration or sound to express something, a carpet or blanket could change its shape, the walls of a room could change colour, and devices like Google Home and Alexa could use simple movements to express something instead of relying only on voice.

In our own, simpler explorations, we made sounds shriller to convey anger or frustration, crumpled up carpets to express fear, made the hands of a clock tick faster to indicate urgency, and so on.

We were aiming to explore the potential of objects and their different modes of communication, in order to develop a vocabulary that designers may use while designing machines now and in the future.

Application

My teammate and I chose to work with sound. We created expressive sound pieces by tweaking the frequency, tempo and amplitude of the sound, producing five pieces, each depicting a different emotion.
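To illustrate how these three parameters shape a sound, here is a minimal Python sketch that synthesizes a pulsed sine tone: frequency sets the pitch, tempo sets how often the tone pulses, and amplitude sets the loudness. It is a stand-in built on stated assumptions: it generates a plain tone rather than editing a recorded sound, and the function names (`synthesize`, `write_wav`) and the `PRESETS` values are hypothetical, not the settings behind the five pieces.

```python
import math
import struct
import wave

SAMPLE_RATE = 44_100  # samples per second

# Hypothetical presets: illustrative values only, NOT the settings
# used for the original sound pieces.
PRESETS = {
    "anger":      {"frequency": 880.0, "tempo_bpm": 240, "amplitude": 0.9},
    "excitement": {"frequency": 660.0, "tempo_bpm": 180, "amplitude": 0.7},
    "tired":      {"frequency": 220.0, "tempo_bpm": 50,  "amplitude": 0.3},
}


def synthesize(frequency, tempo_bpm, amplitude, duration_s, sample_rate=SAMPLE_RATE):
    """Return float samples in [-1, 1] for a sine tone pulsed on and off.

    frequency -> pitch in Hz (higher sounds shriller)
    tempo_bpm -> pulses per minute (faster sounds more urgent)
    amplitude -> loudness, from 0.0 to 1.0
    """
    beat_len = 60.0 / tempo_bpm  # seconds per pulse cycle
    n_samples = int(duration_s * sample_rate)
    samples = []
    for i in range(n_samples):
        t = i / sample_rate
        # Tone sounds during the first half of each beat, silence in the second half.
        gate = 1.0 if (t % beat_len) < beat_len / 2 else 0.0
        samples.append(gate * amplitude * math.sin(2 * math.pi * frequency * t))
    return samples


def write_wav(path, samples, sample_rate=SAMPLE_RATE):
    """Write samples as a mono, 16-bit PCM WAV file."""
    frames = b"".join(
        # Clamp to [-1, 1], then scale to the signed 16-bit range.
        struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767))
        for s in samples
    )
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16 bits per sample
        wav.setframerate(sample_rate)
        wav.writeframes(frames)
```

For instance, `write_wav("anger.wav", synthesize(duration_s=3.0, **PRESETS["anger"]))` would render the hypothetical anger preset as a three-second WAV file. Gating the tone on and off, rather than bending its pitch, keeps tempo independent of frequency, so each parameter can be adjusted on its own.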

Expression - Anger (please ensure your volume is lowered for this)

Expression - Anticipation/Nagging

Expression - Excitement

Expression - Surprise

Expression - Tired

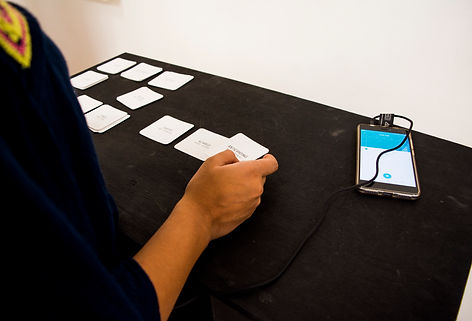

Testing

We put these five sounds in a sequence and printed accompanying cards, each bearing the name of one emotion. Listeners then had to match each sound they heard to an emotion card, describing what they thought the "machine" was "expressing" at that moment.

As a class, we presented our work at a public exhibition at Rangoli (Bangalore) in December '18.

The exhibition also gave us the perfect opportunity to test our theories with visitors, who heard the sequence of sounds and then matched cards to the emotion they thought the machine was expressing.

We noted down the visitors' responses. The results largely supported our hypothesis, but they also showed its limits. Using "emotional" words to describe machine behaviour is tricky, because each listener interprets an emotion expressed through sound quite subjectively. This was especially true where the graphs for two emotions differed only slightly, as with "anger" and "surprise".
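As a hypothetical sketch of how card-matching responses like these could be tallied, the Python functions below compute, for each intended emotion, the fraction of listeners who picked the matching card, along with a confusion table of every (played, chosen) pairing. The function names and the input format are assumptions for illustration; this is not how the exhibition responses were actually recorded or analysed.

```python
from collections import Counter, defaultdict


def score_responses(responses):
    """Tally card-matching results (hypothetical input format).

    responses: iterable of (played, chosen) pairs, where `played` is the
    emotion a sound piece was designed to express and `chosen` is the card
    a listener picked for it.

    Returns (match_rate, confusion): match_rate maps each played emotion to
    the fraction of listeners who picked the intended card, and
    confusion[played][chosen] counts every pairing.
    """
    confusion = defaultdict(Counter)
    for played, chosen in responses:
        confusion[played][chosen] += 1
    match_rate = {
        # A Counter returns 0 for a card nobody chose, so no KeyError here.
        played: counts[played] / sum(counts.values())
        for played, counts in confusion.items()
    }
    return match_rate, confusion


def most_confused(confusion):
    """Return the (played, chosen, count) mismatch seen most often, or None."""
    mismatches = [
        (played, chosen, n)
        for played, counts in confusion.items()
        for chosen, n in counts.items()
        if chosen != played
    ]
    return max(mismatches, key=lambda m: m[2], default=None)
```

A confusion table makes near-misses visible: two emotions whose sounds differ only slightly would show up as large off-diagonal counts between them, rather than being hidden inside a single overall accuracy figure.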

Much of what we explored and did in this class was new to me. I had never before relied almost exclusively on prototyping to build on an idea or a theory, and I must admit that the process is both forgiving and encouraging: making mistakes again and again is part of it, and you learn from each attempt.

This class also put the future of robots and artificial intelligence into perspective. Today's market is saturated with robots and machines that rely on human modes of expression, such as words and facial features. While this is an attempt to "humanise" the machine, the flip side is that it can quickly become unnerving for many people. Perhaps using a machine's or an object's innate properties to make it "express" something could make it more approachable.